Chapter I: The birth of Parquet

This is the first part of my three-chapter blog post: Ten years of building open source standards.

Prologue

15 years ago (2007-2011) I was at Yahoo! working with Map/Reduce and Apache Pig, which was the better Map/Reduce at the time.

The Dremel paper had just come out and, since everything I worked with seemed to be inspired by Google papers, I read it. I could see how it applied to what we were doing at Yahoo!, and it would become a big inspiration for my future work.

Pig has since fallen out of favor and has mostly been replaced by Apache Spark for many distributed computing needs. However, it is the project where I earned my first committership at the ASF, and it holds a special place in my heart. Starting as a nimble caterpillar user of the project, I progressed through all the stages of metamorphosis, building my cocoon as a contributor and finally emerging as a maintainer. In the ASF world, this happens by invitation: first to become a committer, and then to join the Project Management Committee. Eventually, I was elected chair of the project for a year. During that time, I learned a lot about open source foundations and how their different roles work.

This is also a great way to grow your network. Through the Pig community, I met engineers at companies like Twitter, LinkedIn and Netflix who were solving similar problems.

Through those connections, I joined the data platform team at Twitter in 2011, leading the processing tools team through the company's explosive growth in the pre-IPO years.

The context

The data platform at Twitter had two main tools in its belt. On one end, there was Hadoop, a highly scalable, high-throughput data processing juggernaut. But it was also fairly high latency, Map/Reduce being something of a brute-force approach to data processing. You’d launch your query and go get coffee before it completed.

On the other end, there was Vertica, a state-of-the-art Massively Parallel Processing (MPP) database, leveraging columnar storage and vectorization to achieve low-latency results. However, Vertica was much more expensive and didn’t scale as well as Hadoop, which raised constant questions about which data would make it there. It never had all the datasets, nor the full time span, available in Hadoop.

The idea

This raised the question of how to make Hadoop more like Vertica. It looked like the main problem was that the Hadoop abstractions were a bit too low-level and geared towards building a search index.

Hadoop provides Map/Reduce on top of a distributed file system, while Vertica is a distributed query engine on top of columnar storage.

During that time, the data platform team at Twitter had a paper reading group. People would read papers and, if they found them interesting, present them to the group. I re-read the Dremel paper and presented it. I also read papers describing how those MPP databases work: C-Store, Vertica, MonetDB and others. This gave me a better understanding of distributed query engines and the benefits of column stores.

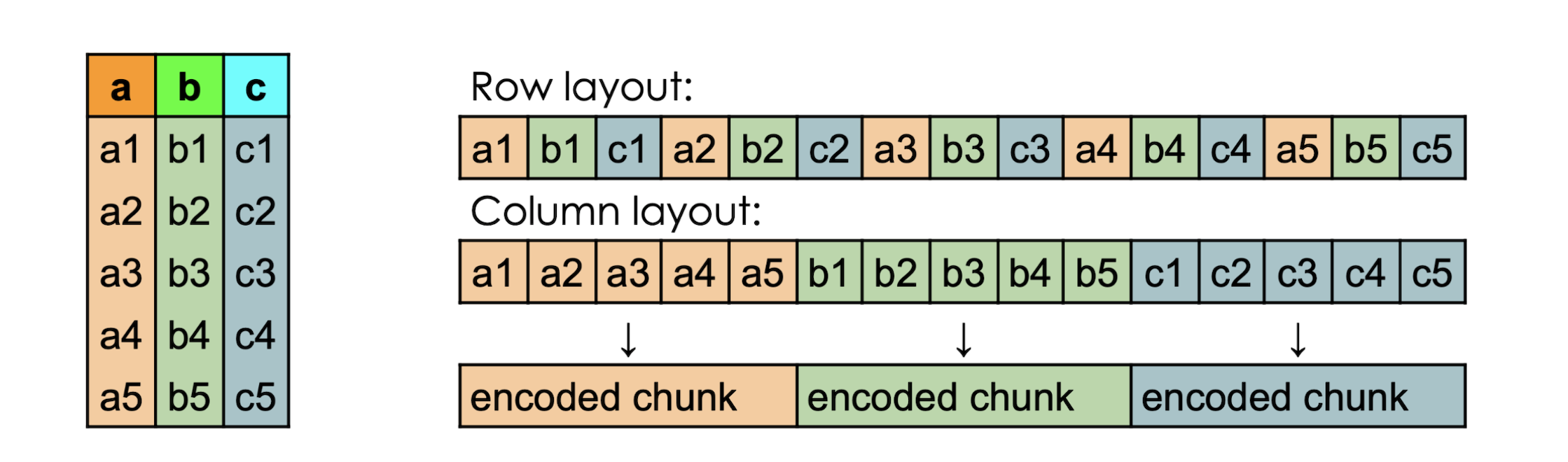

What’s a columnar layout

When you think of a table, it is two-dimensional, with columns and rows. However, when it’s physically stored on a disk, it has to be arranged as a linear, one-dimensional succession of bits.

Organizing the table in a row layout means writing each row one after another. This interleaves data of different types: you write the value of the first column of the first row, followed by the value of the second column (possibly of a different type) of the same row, and so on, one row at a time.

In a column layout, you write all the values of the first column for all the rows first, then the values for the second column, and so on.

One of the benefits is that when you need to retrieve only a subset of the columns, which is very common, you can scan them from disk much more efficiently in big chunks rather than doing a lot of small seeks. Another benefit is that the data compresses better, because you encode together values of the same type, which are much more homogeneous.
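To make the difference concrete, here is a minimal in-memory sketch in Python. It is a toy, not how Parquet lays out bytes on disk: a Python list stands in for the linear file, the three-row table is made up, and run-length encoding is just one simple example of an encoding that benefits from homogeneous, adjacent values.

```python
from itertools import groupby

# A tiny three-column table: (id, country, score). Values are made up for illustration.
ROWS = [
    (1, "US", 3.5),
    (2, "US", 4.0),
    (3, "FR", 1.25),
]


def row_layout(rows):
    """Flatten the table one row at a time: values of different types are interleaved."""
    return [value for row in rows for value in row]


def column_layout(rows):
    """Flatten the table one column at a time: each column's values are contiguous."""
    return [value for column in zip(*rows) for value in column]


def read_column_from_rows(flat, n_cols, col):
    """In a row layout, one column is scattered: every n_cols-th value, starting at col."""
    return flat[col::n_cols]


def read_column_from_columns(flat, n_rows, col):
    """In a column layout, one column is a single contiguous range."""
    start = col * n_rows
    return flat[start:start + n_rows]


def run_length_encode(values):
    """Collapse runs of identical adjacent values into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]


if __name__ == "__main__":
    by_row = row_layout(ROWS)
    by_col = column_layout(ROWS)
    print(by_row)  # [1, 'US', 3.5, 2, 'US', 4.0, 3, 'FR', 1.25]
    print(by_col)  # [1, 2, 3, 'US', 'US', 'FR', 3.5, 4.0, 1.25]
    country = read_column_from_columns(by_col, n_rows=len(ROWS), col=1)
    assert country == read_column_from_rows(by_row, n_cols=3, col=1)
    print(run_length_encode(country))  # [('US', 2), ('FR', 1)]
```

Both reads return the same column, but the row-layout read has to skip over the other columns (a strided access, i.e. many small seeks on a real disk), while the column-layout read is one contiguous slice. The adjacent, same-typed country values are also what makes a simple encoding like run-length encoding pay off.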

Red Elm

That was it. On my shuttle ride to and from the office, I started prototyping something that would make Hadoop more like Vertica and, like any good project, it first needed a name. Twitter had a tendency to name everything after birds. Trying to be clever, I was looking for an anagram of Dremel to name my project. Emerald was already taken, so I picked Red Elm, and since birds live in trees, this sounded like a good choice. This was also quite ambitious, as it implied that I was going to implement all of Dremel, not just the storage format. That didn’t quite happen, and it’s for the best.

I took inspiration from the existing formats I could find (TFile, RCFile, CIF, Trevni) and from the way schemas were defined at Twitter (Thrift and Pig), and, over the summer of 2012, I started implementing the column splitting algorithm described in the Dremel paper.

Looking for partners

To make this project sustainable, it needed to be integrated into the rest of the open source data stack. It could not be a proprietary Twitter data format, as that would have required an enormous amount of work to integrate with all the tools we used and, after that, even more work to keep it compatible over time and to integrate it with future tools we wanted to use. Needless to say, that would have been far more work than we could have done.

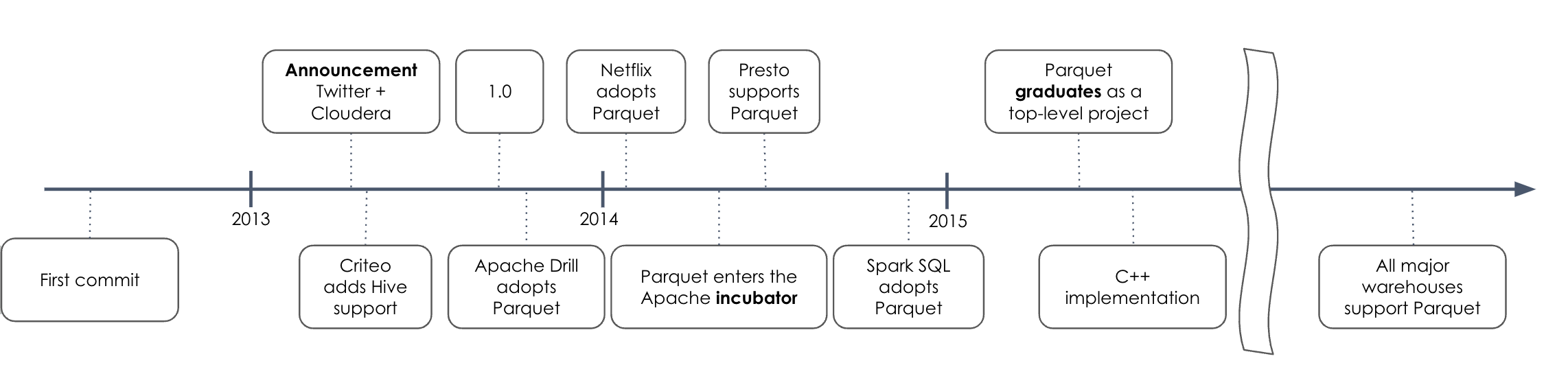

So I started looking for partners. I tweeted about implementing the Dremel paper and how I found an error in one of the figures. That led to connecting with the Impala team at Cloudera who were also prototyping a columnar format.

Obviously, since this was a common need in the Hadoop ecosystem, I was not the only one looking for a solution. We met, and it turned out we had complementary strengths. I brought compatibility with the JVM ecosystem, and they brought a distributed query engine that generated native code using LLVM. We merged our designs: I implemented the Java side, they implemented the native reader integrated in Impala, and that became Parquet. We picked a name that would evoke the bottom layer of a database with an interesting layout.

We published an announcement that Twitter and Cloudera were collaborating, which showed it was not just one company's project. Quickly, several companies became interested. Criteo, an ad targeting company in Europe, built Hive support, Netflix adopted Parquet and built Presto support, and Apache Drill adopted Parquet as its baseline storage format. Then we entered the Apache Incubator to officially become part of the Apache Software Foundation, and later graduated as a top-level project.

Once Spark SQL was built on top of Parquet, it quickly reached escape velocity. Continuing on that trajectory, today it’s integrated into all major warehouses, and you can even read Parquet from Excel if you want.

Lessons learned

Every contributor becomes a stakeholder

This is particularly true for projects that define a standard format, especially one used by other communities that build their own projects on top of it. To ensure success and community growth, every contributor becomes a stakeholder. That means they don’t just contribute and move on; instead, they have a voice and their opinion matters.

We seek their opinion and feedback. We make sure their needs are met. They now have skin in the game and an impact on the future direction. Not everybody will use that power, but those who do will also exhibit greater responsibility and contribute meaningfully.

The snowball effect

Sometimes, building an open source project and supporting its community feels like you’re pushing a boulder uphill. But, in reality, the mechanics are quite different. It’s more like a snowball.

Instead of new contributors just helping you push (without doing much to address the risk of slipping backwards), they are actually creating momentum. There’s a real snowball effect: as your project grows, it accelerates. Eventually, it reaches an inflection point and gains enough speed to reach escape velocity. It is out of your control, but on its way up. Today, I could not stop the Parquet project even if I wanted to.

Open source comes in all shapes and sizes

The most basic definition of open source is that the code is available for you to read, but that does not mean you can use it the way you want.

Licenses define what you can use the code for and establish the rights and constraints that come with it. There are very different types of open source licenses. All of them will tell you that you’re on your own if you shoot yourself in the foot, and then proceed to restrict use in different ways.

The Apache License (ASL) is very permissive. It mainly protects attribution: you have to give credit if you’re using it. You can modify the code however you want, but you have to clearly state that you did. You can’t redistribute something you have modified under the same name. The GPL is more restrictive and is often described as viral: if you build something on top of it, your derivative work also has to be released as open source under the GPL.

Governance clarifies who can make decisions about the project and how someone can join the group that makes decisions. A project can have an open license, but still, as a user, you have no control over the direction of the project.

Good governance clarifies how to contribute, how to become a committer, and how to influence the direction and have a say on important decisions.

Some projects are owned by companies and decisions are made by their employees, while others allow anyone to become a maintainer.

The last part is clarifying who can modify the governance of the project. Can the license change? Who effectively controls how the project is maintained?

Being part of an open source foundation is a way to assign this right to a third party and guarantee a level of neutrality. Two important foundations are the ASF and the Linux Foundation (in particular the CNCF and LF AI & Data). They guarantee that the license of the project is never going to change, and they enforce rules to maintain security processes and keep projects open and inclusive.

You don’t need to be part of those foundations to have good governance, but foundations enforce a set of best practices. What’s important is that the project is upfront about how it can be used and how decisions are made. All shapes and sizes are fine as long as expectations are set correctly.