Chapter II: From Parquet to Arrow

This is the second part of my 3-chapter blog post: Ten years of building open source standards

Connecting the dots

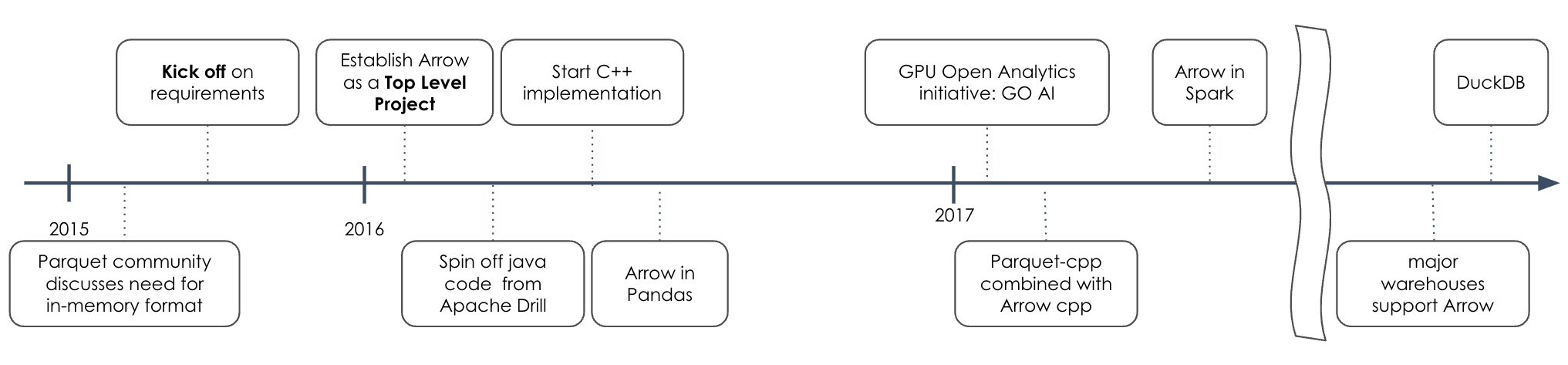

In 2015, a discussion started in the Parquet community around the need for an in-memory columnar format. The goal was to enable vectorization of query engines and interoperability of data exchange. The requirements were different enough from Parquet to warrant the creation of a different format, one focused on in-memory processing.

As we started clarifying exactly what it meant to create an in-memory columnar format, it became clear to me that it looked awfully similar to the in-memory representation that the Apache Drill project had built for its own purposes, so I brought them into the discussion.

I had spent some time with the creators of Drill, discussing how to best read from Parquet into their in-memory representation and facilitating Parquet adoption within their project. As I found myself explaining Dremel’s definition and repetition levels to the Drill team and others, I figured I might as well do it in writing on the Twitter Engineering blog. I reproduced the “Dremel made simple with Parquet” blog post here.

Vectorization in query engines

The need for an in-memory columnar format comes from vectorization in databases. As computer architecture evolved, query execution needed to adapt. MonetDB is a well-known research database whose work was seminal to vectorization. There are two main characteristics of CPUs that lead to this approach.

First, CPUs do not just execute one instruction after another as they used to. To reach higher clock speeds and leverage more of their transistors at the same time, they start executing future instructions before the previous one is finished. This is called pipelining. Depending on the CPU design, each instruction can be broken into many steps, from a few to a few dozen. Instruction execution is thus staggered and happens in parallel, which increases speed because you don’t have to wait for the previous instruction to finish before starting the next one.

However, there’s an obvious caveat. Whenever the next instruction depends on the result of the previous one, the CPU must wait for that result to know which instruction comes next, wasting as many cycles as the pipeline is deep. This happens whenever there is a branch instruction in your logic, which can be an if statement, a loop branch or a virtual method call. Naturally, code has a lot of branching in it, so to take more advantage of pipelining, instead of waiting, CPUs guess which branch is going to be taken and start executing it. If they’re wrong, they discard the result and it’s the same as waiting. If they’re right, they save many cycles. This is called branch prediction.

The main way vectorization takes advantage of this is by producing branches that the CPU can very easily predict. Instead of evaluating an expression horizontally, for a whole row at a time, which may involve a lot of branches, you evaluate it vertically, for a few columns at a time. This creates tight loops that do a single thing many times. The only branch is the loop itself, jumping back to the beginning many times before ending. The branch predictor will easily guess it’s always doing the same thing and be wrong only on the last iteration, when the loop ends. The cycles lost to that last misprediction are negligible compared to a perfect prediction during the rest of the loop.

In the same vein, processors have also been enriched with SIMD (Single Instruction, Multiple Data) instructions, which tell the CPU to execute the same operation on multiple values stored contiguously in memory at once. In the best case, this speeds up that simple loop by a factor equal to the “width” of the SIMD instruction, that is, the number of values it processes at once.
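To make the horizontal-versus-vertical contrast concrete, here is a minimal Python sketch. The tiny expression tree, the column names and the `eval_row` / `eval_columns` helpers are all hypothetical and written for illustration only; they are not taken from Drill, Arrow or any real engine. In Python the interpreter overhead dominates, so this shows the shape of the two approaches rather than the speedup a compiled engine would get.

```python
from array import array

# Hypothetical data: three rows with two numeric columns.
rows = [
    {"price": 2.0, "quantity": 3.0},
    {"price": 1.5, "quantity": 4.0},
    {"price": 10.0, "quantity": 1.0},
]

# Hypothetical expression tree for: price * quantity + 1.0
expr = ("add", ("mul", ("col", "price"), ("col", "quantity")), ("const", 1.0))


def eval_row(node, row):
    """Row at a time: the node-type branches are taken again for every row."""
    kind = node[0]
    if kind == "col":
        return row[node[1]]
    if kind == "const":
        return node[1]
    left = eval_row(node[1], row)
    right = eval_row(node[2], row)
    if kind == "add":
        return left + right
    if kind == "mul":
        return left * right
    raise ValueError(f"unknown node type: {kind}")


def eval_columns(node, columns, length):
    """Column at a time: branch on the node type once, then run one simple loop."""
    kind = node[0]
    if kind == "col":
        return columns[node[1]]
    if kind == "const":
        return array("d", [node[1]] * length)
    left = eval_columns(node[1], columns, length)
    right = eval_columns(node[2], columns, length)
    if kind == "add":
        # One loop doing a single operation over contiguous values.
        return array("d", (a + b for a, b in zip(left, right)))
    if kind == "mul":
        return array("d", (a * b for a, b in zip(left, right)))
    raise ValueError(f"unknown node type: {kind}")


# Pivot the rows into one contiguous array of doubles per column.
columns = {
    name: array("d", (row[name] for row in rows))
    for name in ("price", "quantity")
}

row_result = [eval_row(expr, row) for row in rows]
col_result = list(eval_columns(expr, columns, len(rows)))
print(row_result, col_result)
```

Both paths compute the same values. The difference is where the branching happens: once per row per node in the first case, once per node for the whole column in the second, which leaves inner loops that a compiled engine could hand to the branch predictor and to SIMD instructions.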

Second, CPUs execute instructions much faster than they can fetch data from and save data to main memory, which is why they have local caches embedded in them. Whenever they need to access an area of main memory that is not in the cache, they have to wait for the data to be retrieved, leading to a similar waste of cycles. Columnar representation and vectorization lead to a much more efficient use of the cache: you load only the columns you are working on and keep much better cache locality than with a row representation, where the values you need are scattered across memory and cause many more cache misses.
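A back-of-the-envelope sketch of the cache argument: the Python snippet below counts how many cache lines are touched when scanning a single column under each layout. The 64-byte cache line, the 8-byte values, the table shape and the `lines_touched` helper are assumptions chosen for illustration, and the model ignores prefetching and alignment effects.

```python
CACHE_LINE = 64    # bytes; an assumed, typical cache line size
VALUE_SIZE = 8     # bytes per value (e.g. an int64 or a double)
NUM_COLUMNS = 8    # hypothetical table width
NUM_ROWS = 1000    # hypothetical table length
COLUMN = 2         # index of the one column we want to scan


def lines_touched(offsets):
    """Count the distinct cache lines covered by a list of byte offsets."""
    return len({offset // CACHE_LINE for offset in offsets})


# Row layout: all the values of a row are stored next to each other.
row_offsets = [
    (row * NUM_COLUMNS + COLUMN) * VALUE_SIZE for row in range(NUM_ROWS)
]

# Columnar layout: all the values of a column are stored next to each other.
col_offsets = [
    (COLUMN * NUM_ROWS + row) * VALUE_SIZE for row in range(NUM_ROWS)
]

row_lines = lines_touched(row_offsets)
col_lines = lines_touched(col_offsets)
print(row_lines, col_lines)
```

Under these assumptions each row fills exactly one cache line, so scanning one column in the row layout pulls in every line of the table, while the columnar layout packs eight useful values into each line it loads.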

Kicking off a consensus on the initial requirements

When Parquet started, we were a few people from two companies collaborating. As we made progress, we encouraged others to join and the community grew organically. It was clear that building momentum was important to the success of the project.

To build as much momentum as possible early on, we kicked off this new project with a wide group of people with interest in the topic. They mostly came through the Parquet community and a few connected projects.

We created a set of requirements together and an initial spec. We needed to enable vectorization for fast in-memory processing, support zero-copy data transfer, represent nested data for modern query engines and be language-agnostic.
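As a rough illustration of what zero-copy means, the Python sketch below hands a consumer a window onto a producer’s buffer without duplicating the bytes. It uses only the standard library’s `array` and `memoryview`; it is not Arrow’s API, and the variable names are made up for the example.

```python
from array import array

# Producer side: a contiguous buffer of 64-bit integers.
values = array("q", [10, 20, 30, 40, 50])

# Consumer side: a view over the same memory. Slicing a memoryview
# yields another view, not a copy.
view = memoryview(values)
window = view[1:4]

# A write on the producer side is visible through the consumer's view,
# which shows that both names refer to the same underlying bytes.
values[2] = 99
shared = window.tolist()

# By contrast, bytes() makes an independent copy of the window's contents.
copied = bytes(window)
values[2] = 30

print(shared, window.tolist(), window.nbytes, len(copied))
```

The consumer pays nothing proportional to the data size to gain access. That only works when both sides agree on the exact memory layout, which is why a shared format specification matters for this requirement.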

While Parquet focuses on efficient storage on disk and fast retrieval, Arrow focuses on fast in-memory execution and data exchange. They are both language-agnostic and support nested data structures, which we made sure to keep compatible.
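One common way to keep nested data columnar in memory is to store a flat array of child values plus an array of offsets that marks where each list starts and ends. The Python sketch below shows that idea for a column of integer lists. It is a simplified illustration in the spirit of Arrow’s variable-size list layout, not the actual implementation: null handling is left out, and `encode_lists` and `get_list` are hypothetical helper names.

```python
from array import array


def encode_lists(lists):
    """Flatten a column of integer lists into (offsets, values)."""
    offsets = array("i", [0])   # list i spans values[offsets[i]:offsets[i + 1]]
    values = array("q")         # all child values, stored contiguously
    for item in lists:
        values.extend(item)
        offsets.append(len(values))
    return offsets, values


def get_list(offsets, values, index):
    """Rebuild the list stored at the given position in the column."""
    return list(values[offsets[index]:offsets[index + 1]])


# Hypothetical column with three entries, one of them empty.
column = [[1, 2], [], [3, 4, 5]]
offsets, values = encode_lists(column)

print(offsets.tolist(), values.tolist())
print([get_list(offsets, values, i) for i in range(len(column))])
```

The child values stay in one contiguous buffer, so the same tight loops described earlier can run over them, while the offsets carry the nesting structure separately.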

We decided to spin off the Vector Java code base from the Apache Drill project into its own project and amended it to match a spec that was the result of a consensus in the group. Arrow was the name we picked to reflect speed. Since that code had already incubated in the Drill project, Arrow started as a top-level project in the Apache Software Foundation. Wes McKinney started the C++ implementation straight away with the goal of making Arrow the foundation for Pandas 2.0. From there it made its way into Spark to make Pandas UDFs much more performant.

Fast-forward to today: like Parquet, Arrow is supported in major data warehouses, and its adoption has grown exponentially.

Lessons learned

Bootstrap the community with an initial spec

If building community is like pushing a snowball to gain momentum and reach escape velocity, we might as well start with a snowball as big as possible.

When starting Parquet, we first collaborated between two companies and then, as others showed interest, we brought them in as equal contributors, slowly growing the community. When starting Arrow, we bootstrapped this process with a kick-off meeting that brought together a wide range of people interested in the project to create the initial spec.

If you like exponential curves, they have the unfortunate property of looking extremely flat in the beginning. You need a lot of conviction to reach an inflection point, as it takes a lot of time and effort to get there. Building a standard and growing adoption requires building consensus, hearing every voice, and giving weight to other people’s opinions. That’s how you achieve adoption and influence as a project that, eventually, will take a life of its own.

Find like-minded people who will drive the vision

That being said, the key to maintaining conviction along the way is to find like-minded people who will drive the vision with you. As the saying goes: if you want to go fast (and burn out), go alone; if you want to go far, go together.

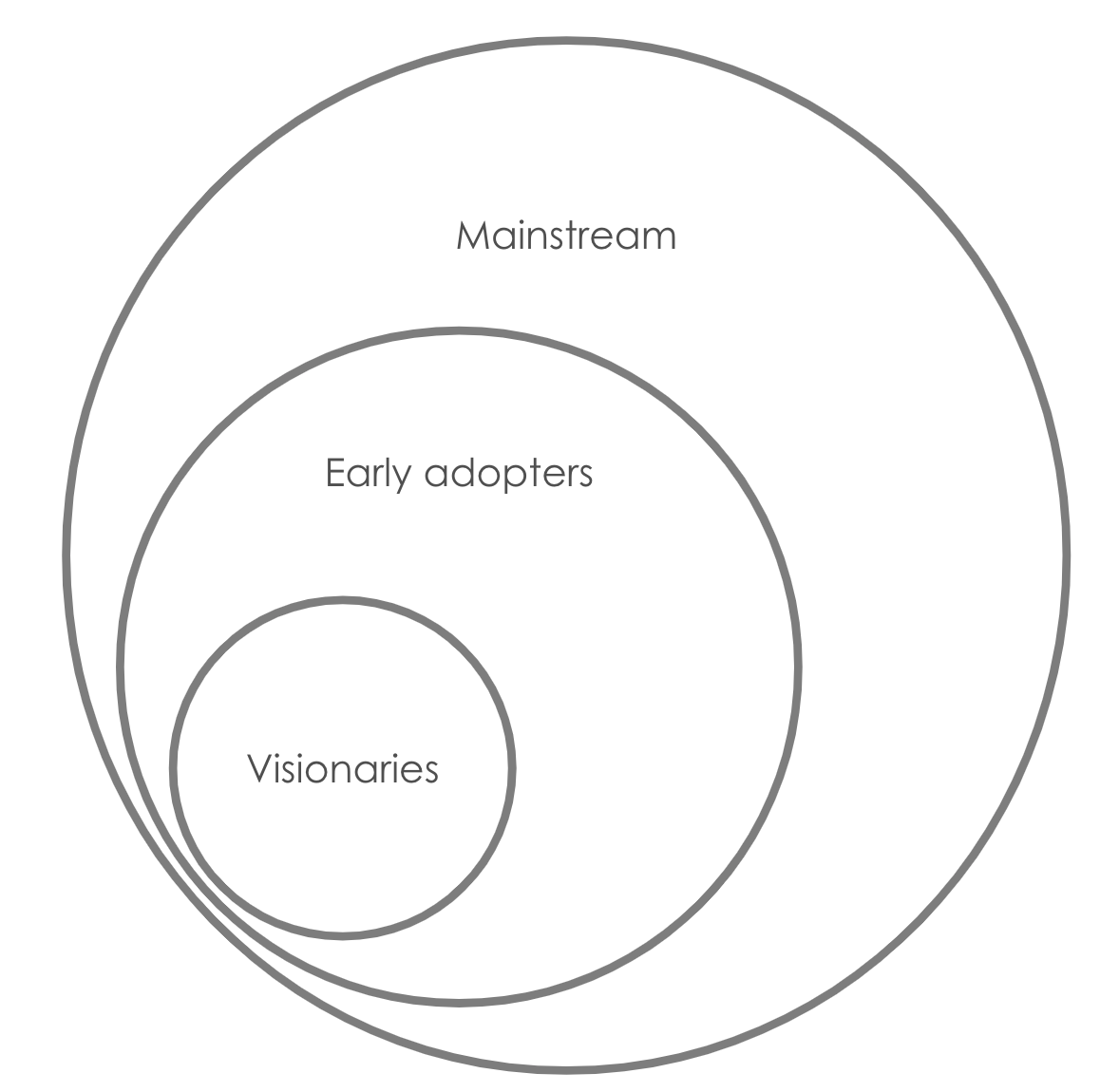

You might wonder how one convinces everyone to use Parquet. Actually, you don’t need to convince everyone of the direction you’re taking. You always have a majority of people who will wait to see if a project is going to be successful before they adopt it. And then, there are the few trailblazers who understand it’s the other way around: that because they adopt the project and because we all put our weight behind it together, the project will be successful. Those visionaries will convince the early adopters and create momentum and, in turn, those early adopters will convince the mainstream users that this is a successful project worth their time and attention.

It’s about the connections we built along the way

Someone once told me: “You’re doing open source the right way”, and I’m pretty sure they meant: “the hard way”. It is true that working with people and building consensus is hard, but it works because of this one weird trick: it’s all about the journey and not the destination. I do this because I enjoy the process, and it is extremely rewarding to work with these visionary people, building things together and seeing the impact of our work. This might look like I am giving you a recipe to achieve the goal of building a standard that gets wide adoption. But in reality, what makes it work is not the desire to achieve that goal; what makes us tick is building relationships and collaborating with amazing people who would not normally work together in the same company. Achieving the goal of adoption is just a byproduct of enjoying the journey.