Chapter III: Onwards, OpenLineage

This is the third part of my three-chapter blog post: Ten years of building open source standards

Much the same as there was a common need for a columnar file format and a columnar in-memory representation, there’s a common need for lineage across the data ecosystem. In this chapter, I’m telling the story of how OpenLineage came to be and filled that need.

Building a healthy data ecosystem

When it comes to data dependencies within a single team, usually people find a way to get along well. They are aware of best practices, they understand how they depend on each other: they know how they produce and consume data and they know who to talk to when something’s broken.

As soon as dependencies cross team boundaries, a lot of friction emerges. Dependencies are opaque: you might consume data without knowing where it’s coming from, who produces it and when. You produce data and don’t know how it’s being consumed and by whom.

Because of this opacity, you might have experienced a situation where expectations on a data SLA are way out of sync between the producer and consumer. You might have built a quick proof of concept during a hackathon and suddenly it’s a critical dependency for a production model driving your company’s revenue. You never signed up for supporting something this critical and your little experiment doesn’t have any of the reliability attributes required. Now everybody is struggling to reverse engineer a way to make it work without proper planning. These kinds of surprises happen all the time and contribute to the overall brittleness of data pipelines. It is really difficult to have visibility beyond the bubble of your close team, and that creates all kinds of problems when you attempt to make data pipelines reliable.

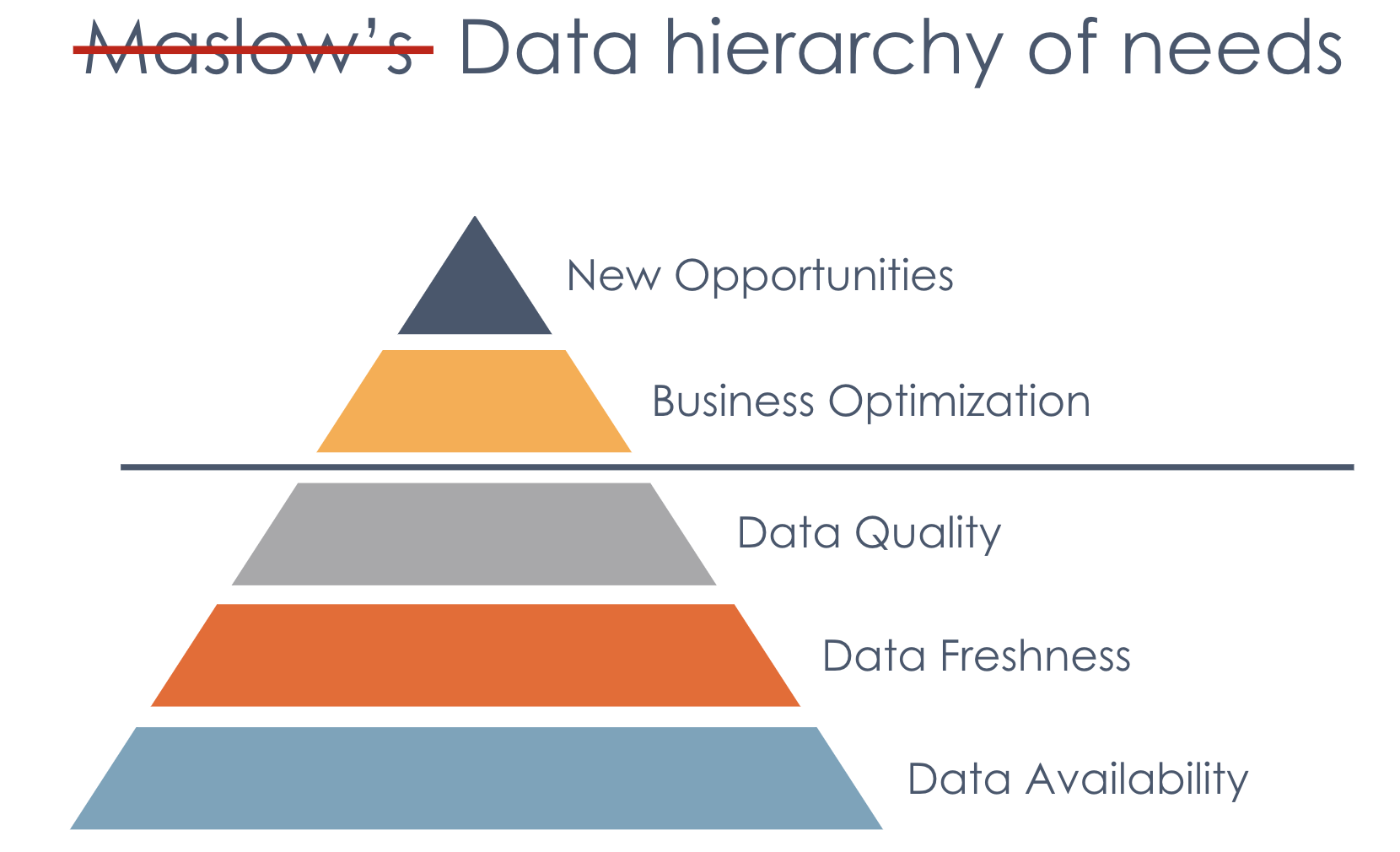

Maslow is famous for proposing a hierarchy of needs. Represented as a pyramid it prioritizes human needs. Before you can achieve happiness, you have more basic needs like food and shelter. Then you’ll be concerned about safety and so on until you can focus on becoming the best version of yourself.

A similar idea applies to data and I took the liberty of repurposing this pyramid into a data hierarchy of needs. Before you can extract value from your data, you need to make sure it is available, up to date and correct. Once those basic needs are attained, we can focus on optimizing our processes and discover new opportunities.

More often than not, we have trouble keeping our head above water and spend most of our time maintaining a minimal level of the basic needs of data reliability. That creates a lot of pain and prevents us from focusing on the added value.

Inception: Marquez

Recognizing this led to the creation of what I saw as the missing piece in the data ecosystem: the automation of collection of operational lineage to create a map of all datasets, the jobs maintaining them and how they depended on each other.

I joined WeWork as the architect for the Data Platform in the hyper growth phase of the pre-IPO times. It is a lot easier to influence the direction of a company when there are more new people by the end of the year than there were people in the company to start with. In a way, I was chasing a similarly stimulating environment to the Twitter pre-IPO days, and I got my wish, except for the IPO.

With Willy Lulciuc, we started from a document describing our goals and as in previous projects, I looked for collaborators in the community. I wanted to make sure this would not be a one off effort and would benefit from a network effect.

We quickly found like-minded people at Stitch Fix, whose HQ was close to our office in San Francisco. Juliet Hougland, who led a team there, christened the project following a naming scheme that had been popular for other data projects: Kafka and Camus are named after authors. Gabriel García Márquez won the Nobel Prize in Literature for (among other works) “One Hundred Years of Solitude”, a novel that follows several generations of the Buendía family after their elder founds the fictitious town of Macondo. A book about lineage: we had our clever project name.

Marquez became this lineage collector and repository; all-knowing of data flows from ETL, through the many transformations that lead to the various use cases we build on top of it. A global map of all the dependencies between teams, through data.

The need for OpenLineage

Following the resounding success of the WeWork IPO [SIC], we embraced the idea of building the tools to solve data observability and started Datakin. We would make the most foundational layers of the data hierarchy of needs easy and help people focus on building value from their data.

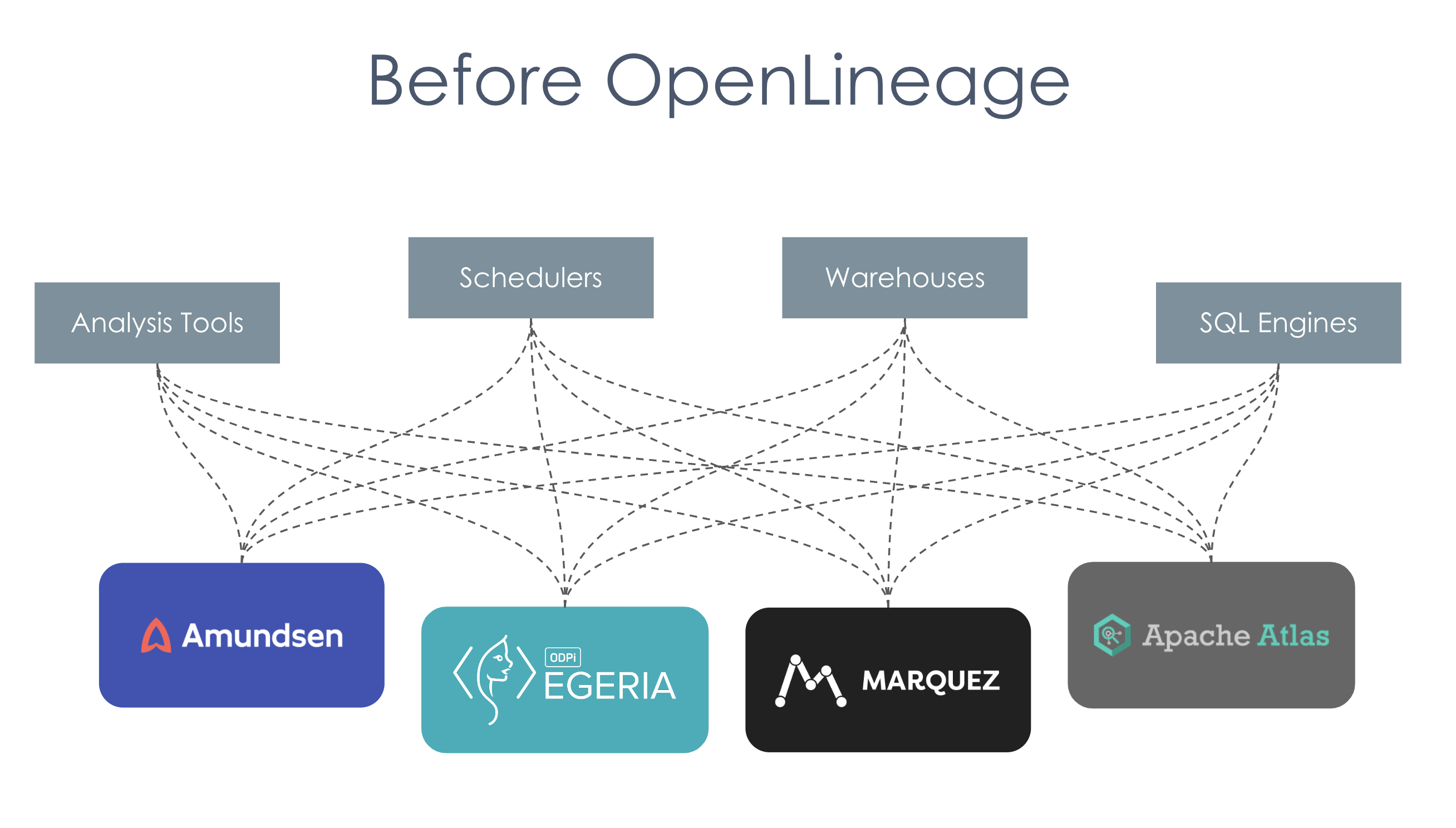

As we were growing the Marquez community and creating relationships with others who cared about lineage, it became clear that everybody was duplicating a great deal of effort and finding it difficult to collect lineage. Worse, as we were trying to improve the situation with Open Source collaboration, there was potential for Marquez to be perceived as a competitor by some. That would have stifled adoption and slowed down our vision of universal data observability.

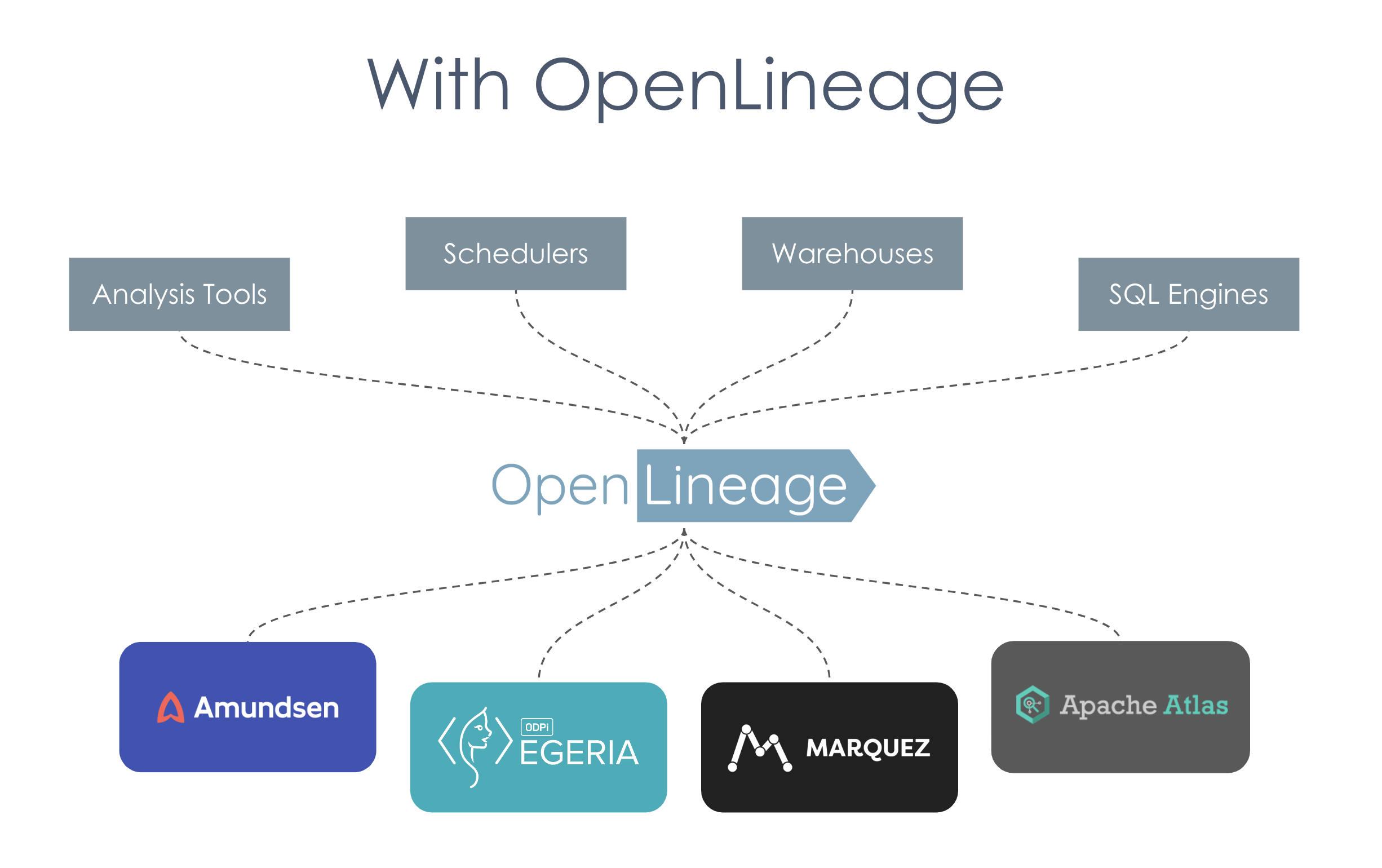

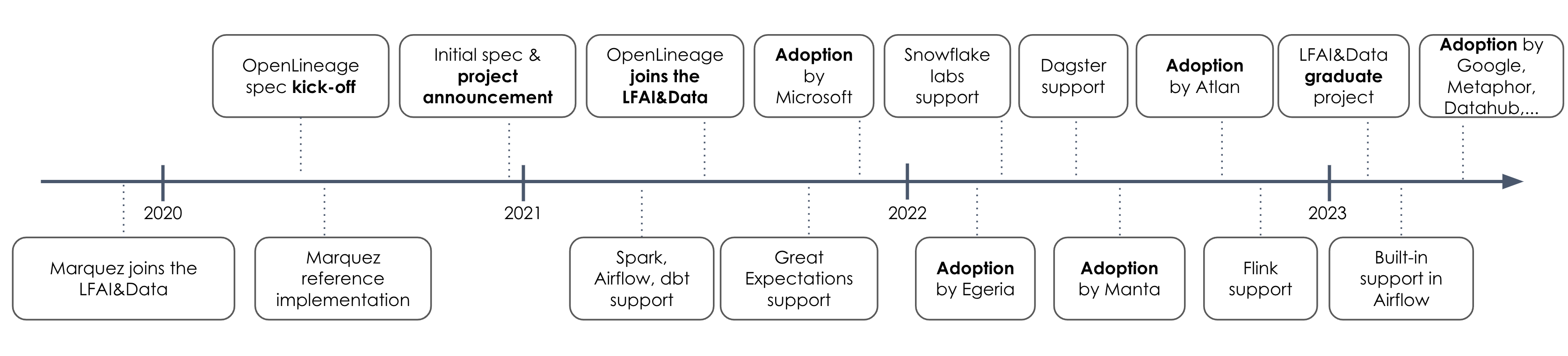

These considerations led to spinning OpenLineage off from Marquez. We took a page out of the Arrow playbook and organized a kick-off to define the OpenLineage spec from scratch, using the learnings of Marquez as well as input from others who cared about lineage in the ecosystem.

The idea was to build an OpenTelemetry for data. As data engineering was catching up to the tooling common in services, we needed a similar project. The instrumentation and collection of operational lineage was the most widely applicable aspect of the Marquez project that everybody benefited from. The new project was also non-threatening to competitors as it didn’t have a storage component and would be a no-brainer to adopt as a source of lineage metadata.

Once the initial spec was approved, we moved the Marquez integrations to OpenLineage and made Marquez its reference implementation. Both projects are part of the LF AI & Data Foundation, an open source foundation under the Linux Foundation umbrella, like the CNCF, which hosts the OpenTelemetry project. The foundation provides oversight and is a strong guarantee of neutrality and transparency in governance.

The OpenLineage spec created a clear boundary between what would be contributed to open source, benefiting everybody and what would go into commercial products. It also made it easy to exchange lineage and the connected metadata. This made it really easy to partner with others and align incentives in the ecosystem. Creating a more focused project boosted growth for both OpenLineage and Marquez.

The model

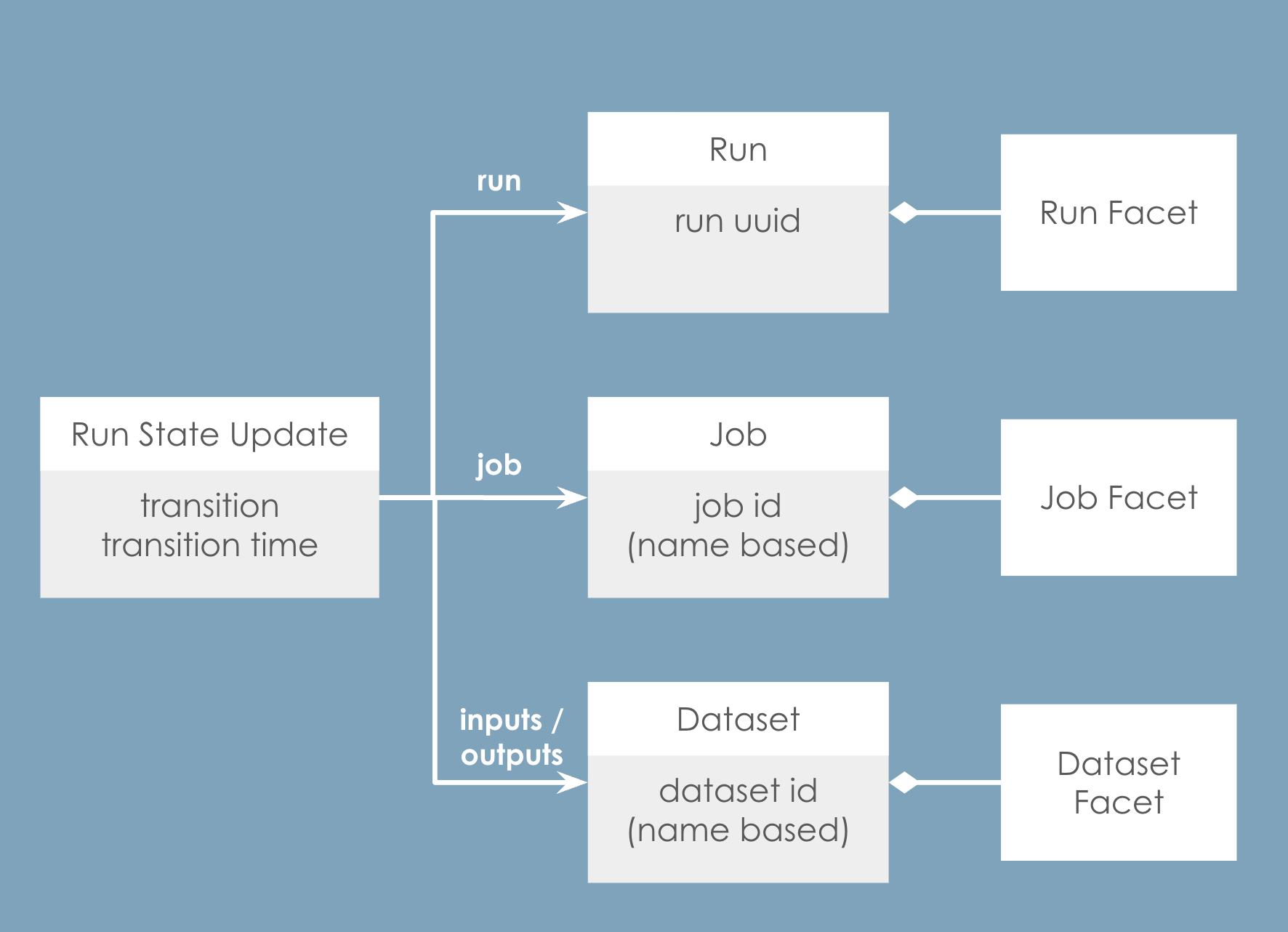

The core OpenLineage model is kept to the simplest expression of lineage while allowing extensibility for various use cases. To start with, it describes runs of jobs consuming and producing data. Jobs can be recurring and have a unique name identifying them. Runs are identified by a UUID correlating START and COMPLETE events. Datasets have a unique name derived from their location, which enables stitching together the lineage graph. Job and Dataset events use the same abstractions to represent static lineage.
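To make the model concrete, here is a minimal sketch in Python that uses plain dictionaries rather than any client library. The field names (`eventType`, `run.runId`, `job.namespace`, `inputs`, `outputs`) follow my reading of the spec and leave out several fields a real event carries, so check them against the spec before relying on them. The job and dataset names and the helpers `make_run_event` and `correlate` are made up for illustration.

```python
import uuid
from datetime import datetime, timezone


def make_run_event(event_type, run_id, job_namespace, job_name, inputs=(), outputs=()):
    """Build a simplified run event as a plain dict (illustrative, not the full spec)."""
    return {
        "eventType": event_type,
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "run": {"runId": run_id},
        "job": {"namespace": job_namespace, "name": job_name},
        # Datasets are identified by a (namespace, name) pair derived from their location.
        "inputs": [{"namespace": ns, "name": name} for ns, name in inputs],
        "outputs": [{"namespace": ns, "name": name} for ns, name in outputs],
    }


def correlate(events):
    """Group event types by run id, which is what ties START to COMPLETE."""
    runs = {}
    for event in events:
        runs.setdefault(event["run"]["runId"], []).append(event["eventType"])
    return runs


# One run of a recurring job: the same UUID appears in both events.
run_id = str(uuid.uuid4())
orders = ("postgres://db", "public.orders")          # hypothetical input dataset
rollup = ("s3://warehouse", "rollups/daily_orders")  # hypothetical output dataset

start = make_run_event("START", run_id, "analytics", "daily_orders_rollup", inputs=[orders])
complete = make_run_event(
    "COMPLETE", run_id, "analytics", "daily_orders_rollup", inputs=[orders], outputs=[rollup]
)

print(correlate([start, complete]))
```

Because the output dataset of one job carries the same name as the input dataset of the next, a consumer can join events from unrelated tools into a single graph without those tools knowing about each other.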

Jobs, Runs and Datasets have facets that describe specific aspects of metadata and are the mechanism of extensibility and specialization. A database table will have different metadata than a file in a bucket or a topic in a Kafka broker.

Facets are either part of the core spec when they are common enough (dataset schema, source control version, etc.) or custom, defined by a third party, when they carry vendor-specific metadata.
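As a rough illustration of how facets attach to an entity, the sketch below adds a schema facet and a custom facet to a dataset represented as a plain dictionary. The `_producer` and `_schemaURL` keys reflect my understanding of how facets identify their origin and definition; the URLs, the `with_facet` helper and the `acme_retentionPolicy` facet are hypothetical, and the prefix on the custom facet name is just one way to avoid clashing with core facets.

```python
import copy

# Placeholder values: a real facet points at the code that produced it
# and at the JSON schema that defines it.
PRODUCER = "https://example.com/my-pipeline"  # hypothetical
SCHEMA_BASE = "https://example.com/schemas"   # hypothetical


def with_facet(entity, facet_name, payload):
    """Return a copy of a job, run or dataset dict with one more facet attached."""
    updated = copy.deepcopy(entity)
    facet = {"_producer": PRODUCER, "_schemaURL": f"{SCHEMA_BASE}/{facet_name}.json"}
    facet.update(payload)
    updated.setdefault("facets", {})[facet_name] = facet
    return updated


table = {"namespace": "postgres://db", "name": "public.orders"}

# A facet of the kind the core spec covers: the dataset schema.
table = with_facet(
    table,
    "schema",
    {"fields": [{"name": "order_id", "type": "BIGINT"}, {"name": "amount", "type": "DECIMAL"}]},
)

# A custom facet defined by a third party, with a vendor prefix in its name.
table = with_facet(table, "acme_retentionPolicy", {"days": 90})

print(sorted(table["facets"]))
```

A consumer that does not recognize `acme_retentionPolicy` can simply ignore it, which is what lets facets evolve independently of the core model.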

This model facilitates building the standard incrementally and decouples many decisions. The initial spec reached consensus fairly rapidly and different sub groups can also quickly reach consensus around specific use cases they care about. For example, people who care about data quality will work on the data quality metrics facet. People who care about compliance will contribute to the column level lineage facet. Having many smaller, more focused discussions helps us stay agile and build the spec incrementally without getting stuck iterating on a large document.

Additionally, custom facets allow third parties to define their own facets without having to ask permission from anyone.

Just like Parquet and Arrow before it, OpenLineage saw its community and adoption grow quickly. It is a popular source of lineage for Airflow (now built-in!), Spark and dbt among others. Many consumers have adopted it, including Microsoft Purview, Atlan, DataHub and Google Dataplex.

The possibilities are endless

Lineage is a common requirement but it is not a feature in itself. It is a means to an end. Asking people to describe lineage is akin to the “blind men and the elephant” story. If you don’t have the whole picture, depending on your perspective it resembles a snake, a fan, or a tree.

Thankfully, this is a great opportunity. Once you understand why they need lineage and what use there is for it, there is a flurry of products that can be built.

A few examples:

- Data reliability: When something is broken, the source of the problem is always upstream.

- Data privacy: Where is my user private data going? Is it used appropriately?

- Data governance: Can I verify that we use data according to our policies?

- Bank regulations: Can I prove correctness of my reporting to regulators with column level lineage?

- Cost management: The cost of a dataset is the accumulation of its upstream costs. The value is the accumulation of its downstream uses.
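Several of these use cases reduce to walking the lineage graph in one direction or the other. The sketch below assumes a tiny, made-up graph where each dataset maps to the datasets it is derived from, plus an invented cost per dataset. It is only meant to show the shape of the traversal, not how any particular product computes these things.

```python
# Hypothetical lineage graph: each dataset maps to the datasets it is derived from.
UPSTREAM = {
    "raw.orders": [],
    "raw.customers": [],
    "staging.orders_clean": ["raw.orders"],
    "marts.revenue_by_customer": ["staging.orders_clean", "raw.customers"],
}

# Invented cost of producing each dataset on its own (arbitrary units).
OWN_COST = {
    "raw.orders": 5.0,
    "raw.customers": 2.0,
    "staging.orders_clean": 3.0,
    "marts.revenue_by_customer": 1.0,
}


def all_upstream(dataset, graph):
    """Every dataset reachable by following 'derived from' edges: where to look when something breaks."""
    seen = set()
    stack = list(graph.get(dataset, []))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(graph.get(current, []))
    return seen


def all_downstream(dataset, graph):
    """Every dataset that depends on this one, directly or not: who is affected, or where user data ends up."""
    return {other for other in graph if dataset in all_upstream(other, graph)}


def accumulated_cost(dataset, graph, own_cost):
    """Own cost plus the cost of each distinct upstream dataset, counted once."""
    return own_cost[dataset] + sum(own_cost[d] for d in all_upstream(dataset, graph))


print(all_upstream("marts.revenue_by_customer", UPSTREAM))
print(all_downstream("raw.orders", UPSTREAM))
print(accumulated_cost("marts.revenue_by_customer", UPSTREAM, OWN_COST))
```

Counting each upstream dataset once is a simplification: in practice you would probably want to split a shared upstream cost across all of its consumers.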

Lessons learned

You don’t start a project and then find a way to make it successful

“What makes you think you can do it again?” That’s the question I was once asked, as I was explaining that OpenLineage was benefiting from my experience starting Parquet and helping get Arrow off the ground. Fair question. I would not be the first one to tout my past luck as proof of what I could do. That got me thinking.

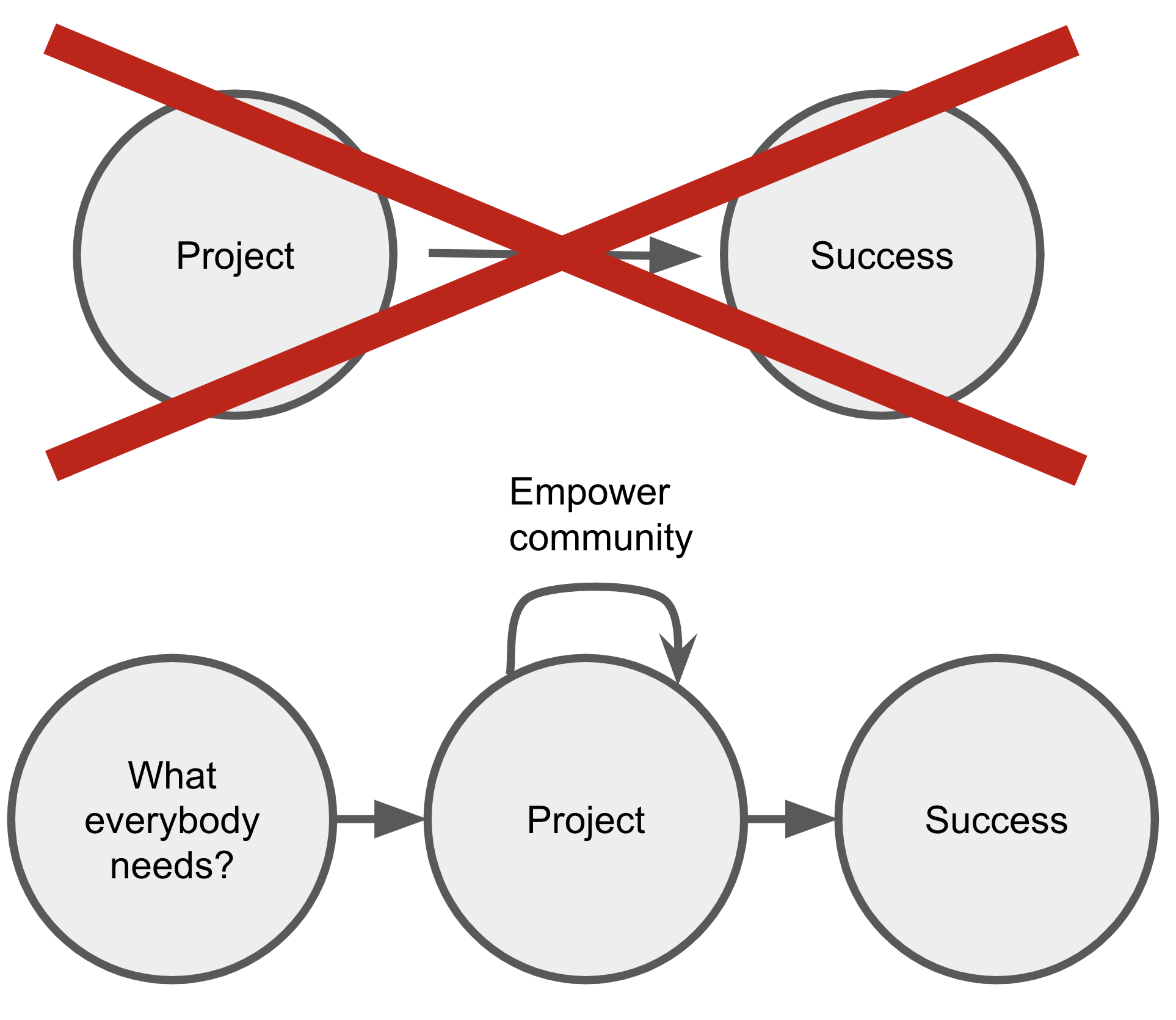

It might look that way, but the process does not start with a pet project followed by some mind tricks to convince everyone to use it. Where it actually starts is with finding the missing piece, that need that everybody has, and then asking yourself: How do we create a very focused, single-minded project to fill that need?

Once you have figured that out, draw the rest of the owl, align everybody’s incentives, and make sure everybody benefits when you make it happen. Empower the community, make sure everybody’s involved and becomes a stakeholder. Make it click for them that when they benefit everybody benefits and that’s why it works.

The Stone Soup

That leads me to the Stone Soup tale. It’s a children’s book that tells the story of a stranger arriving hungry to a town square. Filling a large cooking pot with water and setting it over a fire, he drops a stone at the bottom and starts stirring. To anyone inquiring what he is doing, he affirms he is making a stone soup to share and they are welcome to add their own ingredient. As the first person contributes to that shared soup, more people join and contribute more.

Reading between the lines, he’s cooking a “soup of nothing” with no ingredients, as the stone doesn’t add any flavor. However, just because he creates the environment for sharing and makes contributions easy, people start chipping in. A soup is there to share where there was nothing, and everybody is better for it.

Mandy Chessell (the lead of the Egeria project and an OpenLineage TSC member) pointed out to me that this is exactly what I’m doing, and it stuck with me. I’m just stirring the pot, enabling everybody to contribute and be part of a movement.

Align incentives

It’s all about aligning incentives to build a network effect. You might have the impression that I’m trying to boil the ocean. There’s this gigantic task that requires an infinite amount of energy.

But that analogy is not quite right. If I were boiling the ocean or pushing a rock up a mountain, progress would only speed up linearly as more people joined. We’d boil the ocean a little faster every time someone came along, but we’d still be nowhere near solving the problem.

Thankfully, progress is not linear. Community growth is exponential because every time someone joins, there’s more incentive for more to join and more advocacy for the project. There’s a network effect that turns people into evangelists who convince more people that it is in everybody’s best interest to be part of the project. The more members in the community, the more each newcomer benefits from joining. It’s a chain reaction that can’t be stopped once it’s reached critical mass.

And the magic happens, not because I convince everyone, but because community members talk to each other and create a movement.

Thank you!